This is a cohort-based course, and lessons will start unlocking on July 14th, 2025.

Building AI applications that are genuinely useful involves more than just hitting an LLM API and getting back stock chat responses.

The difference between a proof-of-concept and a production application lies in the details.

Generic chat responses might work for demos, but professional applications need outputs that meet specific requirements.

In a professional environment, code is (ideally) tested, metrics are collected, and analytics are displayed somewhere.

AI development can follow these established patterns.

You will hit roadblocks when trying to:

- Implement essential backend infrastructure (databases, caching, auth) specifically for AI-driven applications.

- Debug and understand the "black box" of AI agent decisions, especially when multiple tools are involved.

- Ensure chat persistence, reliable routing, and real-time UI updates for a seamless user experience.

- Objectively measure AI performance, moving beyond subjective "vibe checks" to judge improvements.

- Manage complex agent logic without creating brittle, monolithic prompts that are hard to maintain and optimize.

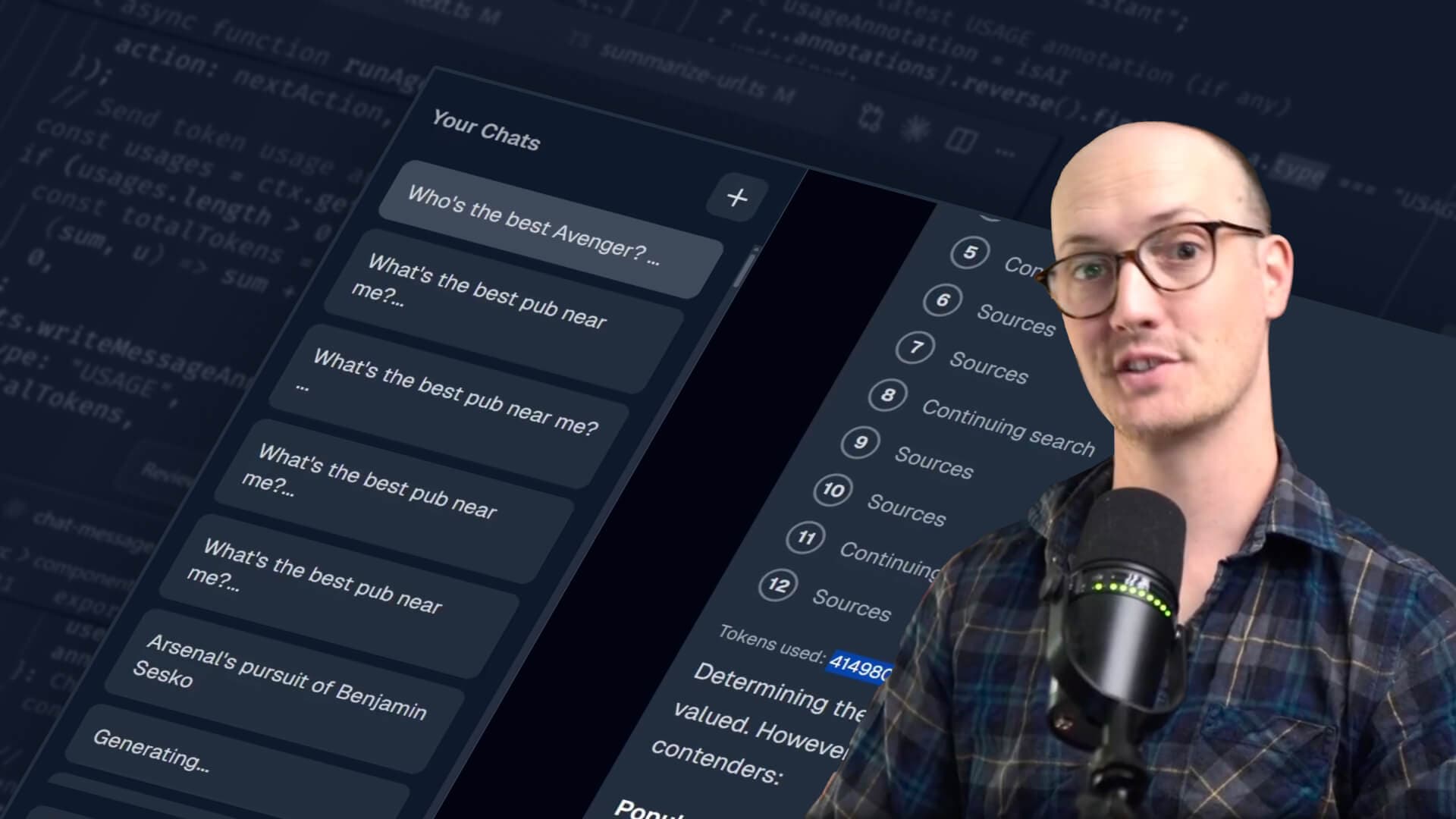

In this course, you will build a "DeepSearch" AI application from the ground up. Along the way, you will learn to understand and implement these patterns so that the result is a production-ready product.

Days 00-02: Getting Started

You’ll start with a project that is already built with Next.js, TypeScript (of course), PostgreSQL via the Drizzle ORM, and Redis for caching.

Over the first couple of days, you will implement fundamental AI app features by building a naive agent. You’ll start by hooking up an LLM of your choice to the Next.js app using the AI SDK. Then you'll implement a search tool that the model can use to supplement its knowledge when conversing with users.
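The following is a minimal, library-free TypeScript sketch of the loop that a naive agent with a search tool runs. It does not use the AI SDK's API. `Model`, `SearchTool`, and `runNaiveAgent` are hypothetical names. The model is a plain synchronous function standing in for a real (async) LLM call.

```typescript
// Hypothetical model contract: given the conversation so far, the model either
// asks for a search or returns a final answer. In a real app this would be an
// async LLM call; here it is a plain function so the loop is easy to follow.
type ModelTurn =
  | { kind: "tool_call"; tool: "search"; query: string }
  | { kind: "answer"; text: string };

type Message = { role: "user" | "assistant" | "tool"; content: string };

type Model = (history: Message[]) => ModelTurn;
type SearchTool = (query: string) => string[];

// Naive agent loop: the model alone decides when to search and when to stop,
// so a step limit is the only thing preventing an endless loop.
function runNaiveAgent(
  question: string,
  model: Model,
  search: SearchTool,
  maxSteps = 5,
): string {
  const history: Message[] = [{ role: "user", content: question }];
  for (let step = 0; step < maxSteps; step++) {
    const turn = model(history);
    if (turn.kind === "answer") {
      return turn.text;
    }
    // The model requested a search: run it and append the results so the
    // next model call can use them as extra knowledge.
    const results = search(turn.query);
    history.push({ role: "assistant", content: `search(${turn.query})` });
    history.push({ role: "tool", content: results.join("\n") });
  }
  return "Sorry, I could not find an answer within the step limit.";
}
```

The agent decides for itself when to stop. Without `maxSteps`, a model that keeps requesting searches would never return.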

Chats with an LLM don’t save themselves, so you will also persist conversations to the database.

Days 03-05: Improve Your Agent through Observability and Evals

The first real differentiator between a vibe-coded side project and a production-ready product you can feel confident putting in front of customers is observability and evals.

You need to know what is going on inside your LLM calls, and you need an objective way to judge the output the LLMs produce.

This is exactly what the next few days are about. You’ll hook up your app to Langfuse and get familiar with reading through the traces the application produces.

Once you can see what your LLM is doing, it’s time to test your agent’s inputs and outputs using evals. Evals are the unit tests of the AI application world. We’ll start by wiring up Evalite, an open-source, Vitest-based eval runner. You’ll learn what makes for good success criteria and build out your evals, including LLM-as-a-Judge scorers and custom datasets specific to your product. We’ll also discuss how to capture negative feedback in traces that you can feed back into your app to make it better.
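This sketch does not reproduce Evalite's API. It is a rough, library-free illustration of what an eval run does: each dataset case goes through the task and is scored between 0 and 1. It assumes a synchronous task and deterministic scorers. `EvalCase`, `Scorer`, `containsExpected`, and `runEval` are hypothetical names.

```typescript
// One dataset case: an input and what a good output should contain.
interface EvalCase {
  input: string;
  expected: string;
}

// A scorer grades one output between 0 and 1. An LLM-as-a-Judge scorer
// would have the same shape but ask a model to grade the output; this
// sketch uses a deterministic check instead.
type Scorer = (c: EvalCase, output: string) => number;

const containsExpected: Scorer = (c, output) =>
  output.toLowerCase().includes(c.expected.toLowerCase()) ? 1 : 0;

// Average that treats an empty list as 0 instead of NaN.
const average = (xs: number[]): number =>
  xs.length === 0 ? 0 : xs.reduce((a, b) => a + b, 0) / xs.length;

// Run every case through the task once, score the output with every scorer,
// and report the mean score per case plus the overall mean.
function runEval(
  dataset: EvalCase[],
  task: (input: string) => string,
  scorers: Scorer[],
): { perCase: number[]; mean: number } {
  const perCase = dataset.map((c) => {
    const output = task(c.input);
    return average(scorers.map((score) => score(c, output)));
  });
  return { perCase, mean: average(perCase) };
}
```

As with unit tests, the useful signal is the trend. If a prompt change lowers `mean` on the same dataset, it is a regression.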

Days 06-07: Agent Architecture

Up to this point, your app has been driven by an increasingly large prompt. That prompt will become unwieldy and impossible to test and iterate on as your app grows in complexity.

We’ll take these next two days to revisit the overall architecture of our application and refactor it to better handle complex, multi-step AI processes. The primary idea behind this refactor is called task decomposition. A smart model determines the next steps to take based on the current conversation, and the actual actions are handed off to focused or cheaper models.
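Below is a minimal sketch of task decomposition. It assumes a planner that only chooses the next step and a set of executors that do the work. The step names, `Planner`, `Executors`, and `runDecomposed` are hypothetical. Plain functions stand in for the smart planning model and for the cheaper, focused models.

```typescript
// Steps the planner may choose from (illustrative names, not a library API).
type Step =
  | { type: "search"; query: string }
  | { type: "summarize" }
  | { type: "answer" };

interface AgentState {
  question: string;
  notes: string[]; // findings accumulated by earlier steps
}

// Stand-in for the capable (more expensive) model: it decides *what* to do
// next but never performs the work itself.
type Planner = (state: AgentState) => Step;

// Stand-ins for focused or cheaper models (or plain code), one per step type.
interface Executors {
  search: (query: string) => string;
  summarize: (text: string) => string;
  answer: (state: AgentState) => string;
}

function runDecomposed(
  question: string,
  plan: Planner,
  exec: Executors,
  maxSteps = 6,
): string {
  const state: AgentState = { question, notes: [] };
  for (let i = 0; i < maxSteps; i++) {
    const step = plan(state);
    switch (step.type) {
      case "search":
        state.notes.push(exec.search(step.query));
        break;
      case "summarize":
        // Compress accumulated notes so later steps get a smaller context.
        state.notes = [exec.summarize(state.notes.join("\n"))];
        break;
      case "answer":
        return exec.answer(state);
    }
  }
  // Step budget exhausted: answer with whatever has been gathered so far.
  return exec.answer(state);
}
```

Each executor has a narrow job, so it can be prompted, tested, and swapped independently. That independence is what the monolithic prompt lacked.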

Days 08-09: Advanced Patterns

Over the last two days, we will evaluate what our DeepSearch agent really is and how we can further optimize its output. You’ll learn the difference between an “Agent” and a “Workflow” and see how, in this use case, we lean harder into workflow patterns to build a more reliable product.

In AI land, the workflow pattern we’ll use is called the evaluator-optimizer loop. If the agent has enough information, it answers the question; if it doesn’t, it searches for more information. With this pattern defined, we’ll embrace its design and optimize around it.
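The loop described above answers when the gathered information is sufficient and searches again when it isn't. Here is one way to sketch that as a workflow in TypeScript. Plain code, not the model, owns the loop, and a search cap bounds cost. `Evaluation`, `LoopDeps`, and `evaluatorOptimizerLoop` are hypothetical names, and each dependency stands in for an LLM call or the search tool.

```typescript
// Outcome of the evaluation step: the gathered context is either sufficient,
// or it is not and the evaluator proposes the next search query.
type Evaluation =
  | { status: "sufficient" }
  | { status: "insufficient"; nextQuery: string };

interface LoopDeps {
  // Stand-in for an LLM call that judges whether the context answers the question.
  evaluate: (question: string, context: string[]) => Evaluation;
  // Stand-in for the search tool.
  search: (query: string) => string[];
  // Stand-in for the LLM call that writes the final answer from the context.
  answer: (question: string, context: string[]) => string;
}

// The workflow owns the loop: evaluate, search if needed, and stop when the
// evaluator is satisfied or the search budget runs out.
function evaluatorOptimizerLoop(
  question: string,
  deps: LoopDeps,
  maxSearches = 3,
): { answer: string; searches: number } {
  const context: string[] = [];
  let searches = 0;
  while (searches < maxSearches) {
    const verdict = deps.evaluate(question, context);
    if (verdict.status === "sufficient") break;
    context.push(...deps.search(verdict.nextQuery));
    searches++;
  }
  // Either the evaluator was satisfied or the budget ran out; in both cases
  // answer from whatever context has been gathered.
  return { answer: deps.answer(question, context), searches };
}
```

Compared with the naive agent, the control flow is fixed and inspectable. Each of the three calls can be traced and evaluated on its own.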

By the end of this cohort, you will be confident building AI applications that are reliable and that improve through iteration and user feedback. LLMs and the AI field as a whole are changing rapidly, so understanding these fundamentals will give you a foundation for building applications for years to come.