What Are Tokens?

Tokens are the fundamental building blocks that help Large Language Models (LLMs) process text. Understanding them is essential, especially since you're billed based on token usage.

Tokens are simply numbers: each one is an ID that stands for a chunk of text, and a sequence of these IDs is how the LLM "sees" the text you provide. The process of converting text into tokens is called encoding.

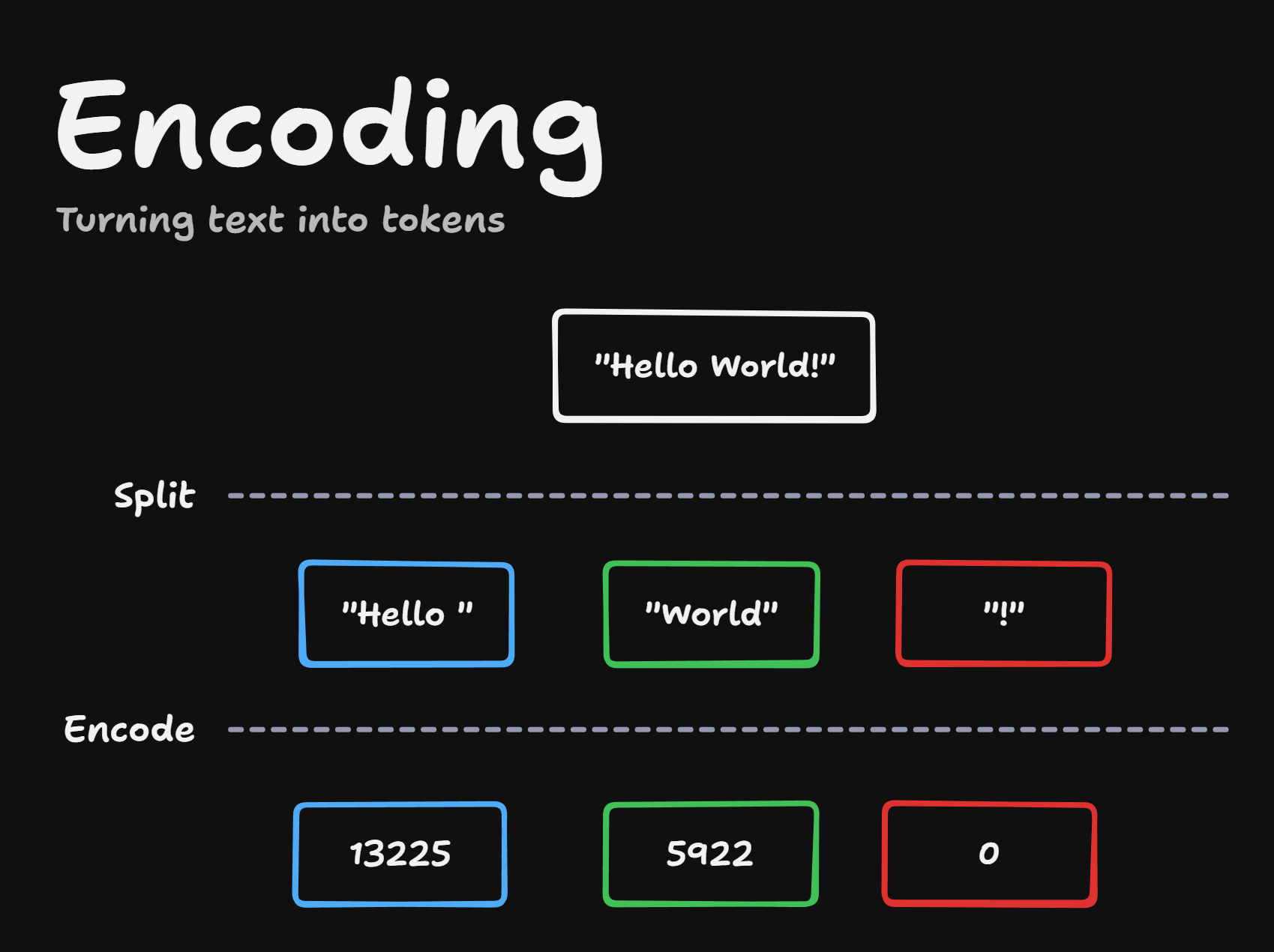

The tokenization process works in two parts:

- The tokenizer splits text into tokens it recognizes

- These tokens are converted into numbers

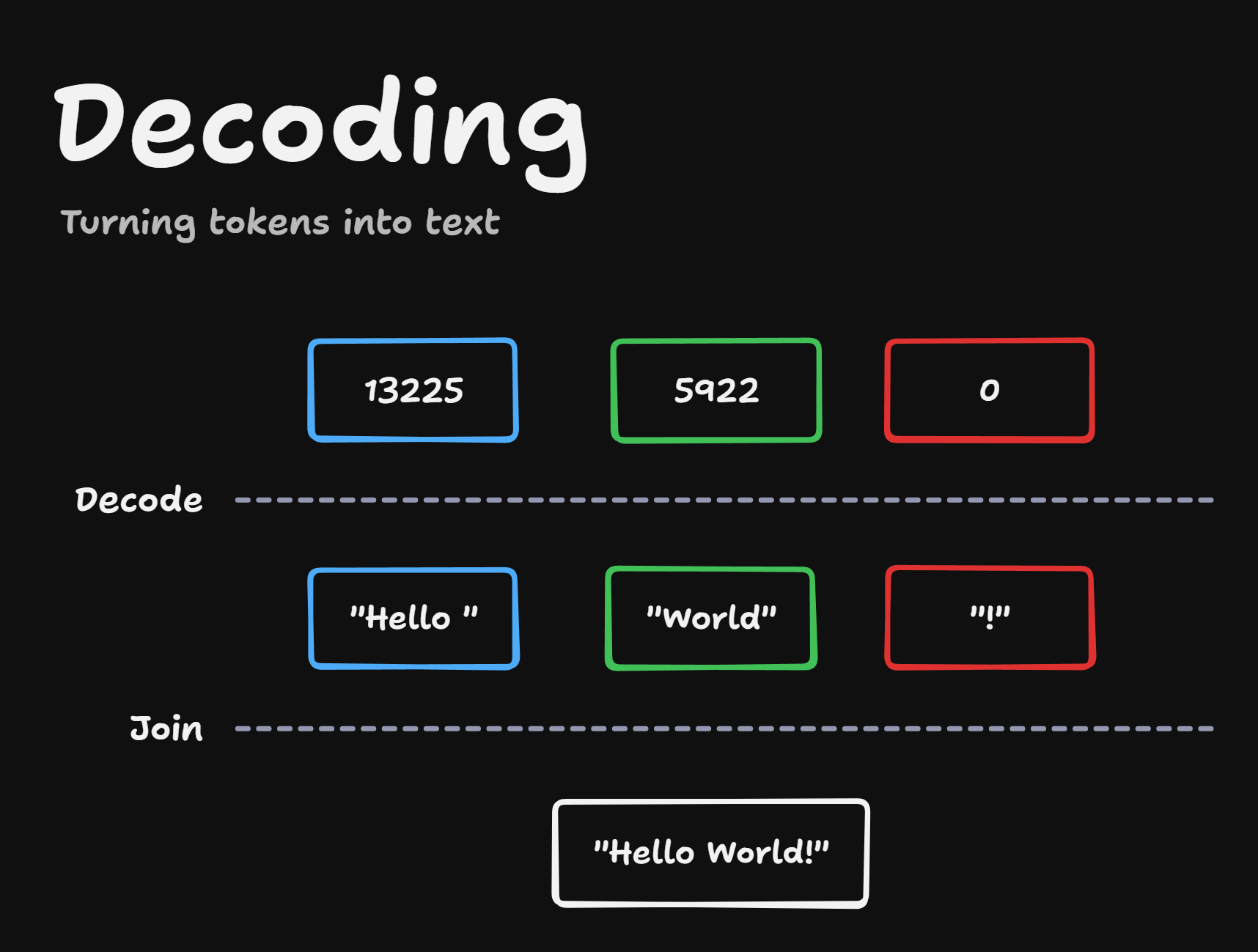

Decoding is the reverse process:

- Numbers are converted back into text tokens

- The tokens are joined together to form the output
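To make the two directions concrete, here is a minimal sketch of encoding and decoding. The vocabulary, the IDs, and the longest-match splitting rule are all made up for illustration; a real tokenizer has a far larger vocabulary and its own splitting rules.

```python
# Toy vocabulary: each known text piece maps to an integer ID.
# The pieces and IDs are invented for this example.
VOCAB = {"the": 0, " cat": 1, " sat": 2, " on": 3, " the": 4, " mat": 5}
ID_TO_PIECE = {token_id: piece for piece, token_id in VOCAB.items()}


def encode(text: str) -> list[int]:
    """Split text into known pieces (longest match first) and return their IDs."""
    ids = []
    pos = 0
    while pos < len(text):
        # Try the longest candidate piece starting at pos, then shorter ones.
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"no token matches the text at position {pos}")
    return ids


def decode(ids: list[int]) -> str:
    """Convert IDs back into text pieces and join them together."""
    return "".join(ID_TO_PIECE[token_id] for token_id in ids)


ids = encode("the cat sat on the mat")
print(ids)          # [0, 1, 2, 3, 4, 5]
print(decode(ids))  # the cat sat on the mat
```

Notice that "the" and " the" (with a leading space) are different tokens here. Keeping the space inside the token is one simple way to make decoding a plain join.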

The LLM Process Flow

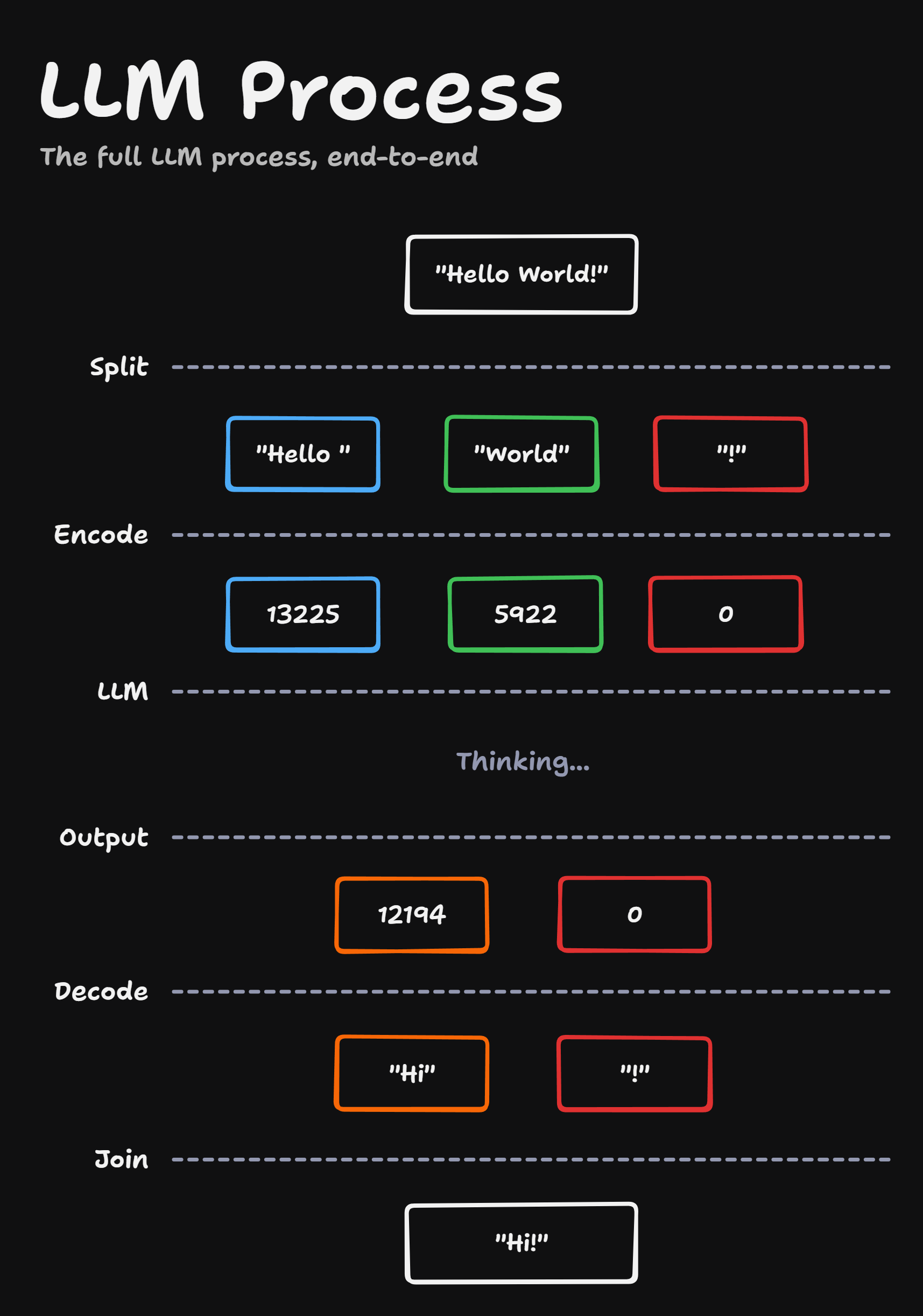

The complete LLM process looks like this:

- Tokenizer encodes your input text into tokens

- LLM processes your tokens

- LLM produces output tokens

- Output tokens are decoded back into readable text

To clarify, input tokens include:

- Your conversation history with the LLM

- System prompts

- Tool definitions

Output tokens are what the LLM sends back as a response.

You're billed for both input and output tokens, typically at different rates, with output tokens usually costing more per token. One way to save money is to design your prompts to generate fewer output tokens.
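Here is a rough sketch of the arithmetic. The prices and token counts below are hypothetical placeholders, not any provider's real rates, so check your provider's pricing page for actual numbers.

```python
# Hypothetical prices in dollars per one million tokens.
# Real rates vary by provider and model.
INPUT_PRICE_PER_MILLION = 3.00
OUTPUT_PRICE_PER_MILLION = 15.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the dollar cost of one request under the assumed prices."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Input tokens = system prompt + tool definitions + conversation history
# (made-up counts for illustration).
input_tokens = 500 + 1_200 + 2_300

verbose = request_cost(input_tokens, output_tokens=1_000)
concise = request_cost(input_tokens, output_tokens=200)
print(f"verbose reply: ${verbose:.4f}")  # verbose reply: $0.0270
print(f"concise reply: ${concise:.4f}")  # concise reply: $0.0150
```

With these assumed prices, trimming the reply from 1,000 to 200 tokens nearly halves the cost of the request, even though the input stays the same.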

How Tokens Are Created

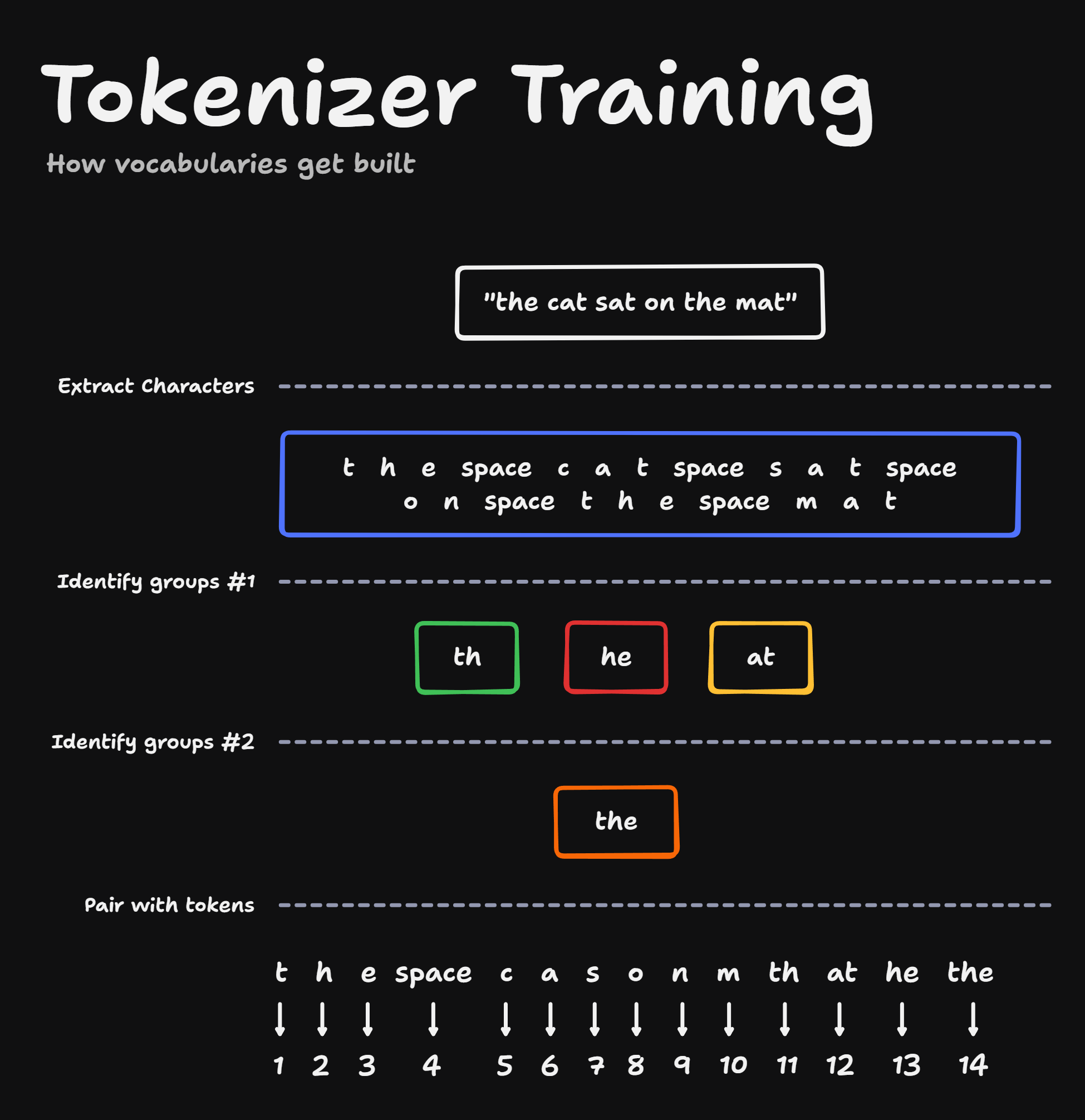

Building a tokenizer's vocabulary starts with a large corpus of text - similar to what's used to train the LLM itself. Let's imagine a tiny corpus consisting of just one sentence: "the cat sat on the mat."

First, all individual characters are extracted:

T H E space C A T space S A T space O N space T H E space M A T

Each unique character (including the space) becomes its own token in the vocabulary.

Next, common groupings of characters are identified:

- "TH" appears in "the" (twice)

- "HE" appears in "the" (twice)

- "AT" appears in "cat", "sat", and "mat"

Each of these groupings also gets assigned its own token.

Then, existing groups are merged with their neighbors to form larger groups - like "TH" + "E" creating "THE" (the word "the"), which gets its own token.
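The steps above resemble byte pair encoding (BPE), a common way of building token vocabularies. Naming that algorithm is my assumption, since the walkthrough doesn't tie itself to one. Here is a minimal sketch on the tiny corpus: start with single characters, then repeatedly merge the most frequent adjacent pair. Ties go to the pair seen first, which is a simplification chosen for this example.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return the most common adjacent pair (ties go to the pair seen first)."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return max(pairs, key=pairs.get)


def merge_pair(tokens, pair):
    """Replace every occurrence of the adjacent pair with one merged token."""
    merged = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


corpus = "the cat sat on the mat"
tokens = list(corpus)   # step 1: every character is a token
vocab = set(tokens)     # unique characters form the starting vocabulary
merges = []

for _ in range(3):      # step 2 onward: learn three merges
    pair = most_frequent_pair(tokens)
    tokens = merge_pair(tokens, pair)
    merges.append(pair[0] + pair[1])
    vocab.add(pair[0] + pair[1])

print(merges)  # ['at', 'th', 'the']
print(tokens)
```

On this corpus the first merge is "at" (it appears three times), then "th", then "th" + "e" to make "the". A real tokenizer runs many thousands of merges over a much larger corpus.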

Vocabulary Size Matters

The goal is to create a large vocabulary of tokens, because a larger vocabulary can represent the same text with fewer tokens. Fewer tokens means less work for the LLM and fewer tokens on your bill.

For example, a vocabulary size of 1,000 tokens might split "understanding" into 5 tokens. A vocabulary size of 50,000 tokens might split it into 3 tokens, and a vocabulary size of 200,000 tokens might split it into 2 tokens.
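Here is a small sketch of that effect. The three vocabularies are hand-picked and tiny, standing in for "small", "medium", and "large", and the greedy longest-match splitting is a simplification of what real tokenizers do.

```python
import string


def tokenize(text, vocab):
    """Greedy longest-match split: always take the longest known piece next."""
    tokens = []
    pos = 0
    while pos < len(text):
        for end in range(len(text), pos, -1):
            if text[pos:end] in vocab:
                tokens.append(text[pos:end])
                pos = end
                break
        else:
            raise ValueError(f"no token matches the text at position {pos}")
    return tokens


# Hypothetical vocabularies: each one extends the previous one.
letters = set(string.ascii_lowercase)
small = letters | {"un", "der", "st", "and", "ing"}
medium = small | {"under", "stand"}
large = medium | {"understand"}

for name, vocab in [("small", small), ("medium", medium), ("large", large)]:
    pieces = tokenize("understanding", vocab)
    print(f"{name}: {len(pieces)} tokens {pieces}")
# small: 5 tokens ['un', 'der', 'st', 'and', 'ing']
# medium: 3 tokens ['under', 'stand', 'ing']
# large: 2 tokens ['understand', 'ing']
```

The same word needs fewer tokens each time the vocabulary gains longer pieces that match it.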

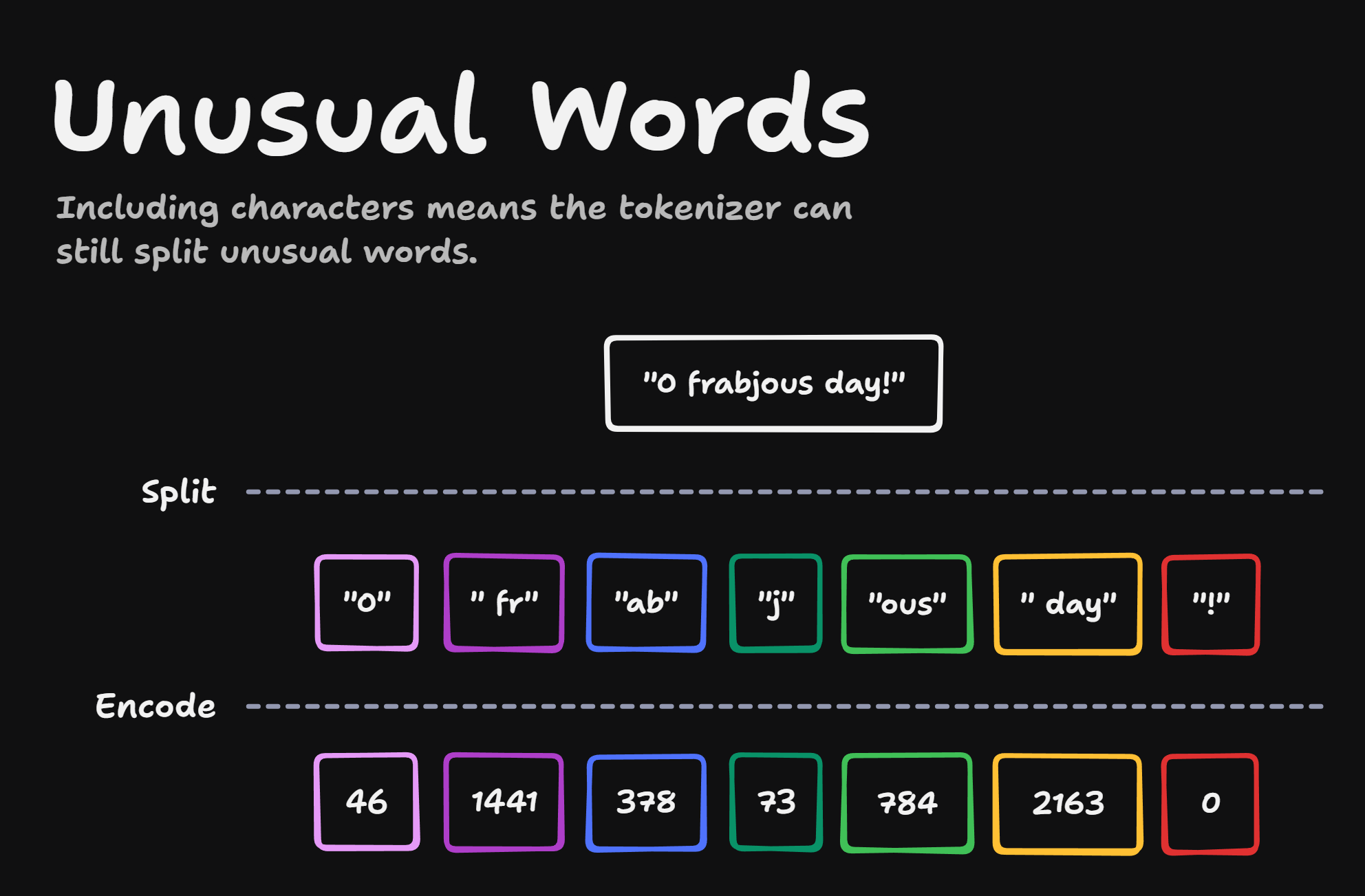

Handling Unusual Words

The tokenizer struggles with uncommon words. For example, "O Frabjous Day" from Lewis Carroll's poem "Jabberwocky" gets split into many tokens because "Frabjous" is a made-up word that doesn't appear frequently in the training corpus.

We can see that the tokenizer turns it into 7 tokens - more than we'd expect from only 15 characters.
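To see why rare words fragment, here is a minimal sketch that applies a short list of learned merges to a few words. The merge list is hypothetical: it's the kind of thing a tiny corpus like "the cat sat on the mat" might produce, and nothing like a real tokenizer's list.

```python
def apply_merges(word, merges):
    """Start from single characters and apply each learned merge in order."""
    tokens = list(word)
    for left, right in merges:
        merged = []
        i = 0
        while i < len(tokens):
            if i + 1 < len(tokens) and tokens[i] == left and tokens[i + 1] == right:
                merged.append(left + right)
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return tokens


# Hypothetical merges, listed in the order they were learned.
MERGES = [("a", "t"), ("t", "h"), ("th", "e")]

for word in ["the", "mat", "frabjous"]:
    pieces = apply_merges(word, MERGES)
    print(f"{word!r}: {len(pieces)} tokens {pieces}")
# 'the': 1 tokens ['the']
# 'mat': 2 tokens ['m', 'at']
# 'frabjous': 8 tokens ['f', 'r', 'a', 'b', 'j', 'o', 'u', 's']
```

None of the learned merges match anything in "frabjous", so it stays as one token per character. A real tokenizer has many more merges, so rare words usually break into a few short pieces rather than single characters, but the idea is the same.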

Final Thoughts

I hope that helps demystify tokens a bit. I found the tiktokenizer playground really useful for understanding this stuff.

Let me know if you have any questions, and tell me what you'd like me to cover next.

Matt